Data Mesh: A Poisoned Gift or the Key to Your Kingdom?

Data Mesh promises to eliminate silos via decentralization and domain-driven data ownership. Yet, in event-driven architectures, challenges like ambiguous ownership, governance gaps, and legacy infrastructure persist.

The Broken Promise of Data Mesh

Many organizations jump into Data Mesh hoping it will solve their data silo problems, give teams more control, and deliver faster, more scalable results. However, after spending millions, they often face significant challenges: broken data, failing pipelines, and teams unsure of who owns what. These issues are not just anecdotal; according to a 2022 Forrester survey, 50% of organizations implementing Data Mesh report failures in their data operations, with a mean time to repair (MTTR) averaging more than 12 hours. Such inefficiencies highlight the need for careful strategic planning before embarking on a Data Mesh journey.

The problem isn’t with the idea itself. Zhamak Dehghani’s core principles are forward-thinking and important for today’s data needs. But the real challenge lies in implementation. Too often, organizations rush into Data Mesh without sufficient planning or alignment, misinterpreting its concepts and failing to adapt them to their unique business realities.

Organizations spent millions chasing the dream of faster decision-making, less reliance on a central data team, and scalable data governance. But the reality doesn't always match the promise.

Instead of eliminating silos, many organizations end up creating more of them: smaller silos, distributed among domain teams, but no less challenging to manage. Teams struggle with communication, ownership disputes, technical complexity, and governance problems.

Beyond these implementation struggles lies a deeper issue. Many of Data Mesh’s foundational principles were built on assumptions rooted in the world of data warehousing: batch processing, centralized data lakes, and analytical workflows. The modern reality, however, places rising demand on real-time data streams and on architectures that must process and deliver data as it is generated, with immediate availability and response.

To bridge this gap between intention and evolving business realities, organizations must reconsider how Data Mesh principles can keep pace with contemporary architectural demands.

From Savior to Saboteur: The Missteps That Ruin Data Mesh

Data Mesh implementation is riddled with pitfalls stemming from poor execution, operational shortcomings, and cultural resistance. These mistakes don’t just hold companies back; they actively undermine the original vision.

Silo Replacement, Not Elimination

The decentralization promised by Data Mesh frequently results in fragmented, disconnected workflows rather than cohesive data ecosystems.

Key Failures in Silo Replacement:

- Teams often adopt incompatible tools and technologies across domains. Marketing may favor Airflow and Parquet, while sales clings to Python scripts and CSV. This inconsistency makes integration far more chaotic.

- Ownership boundaries blur. For example, critical shared elements like customer_id, used across sales, marketing, and finance, are left ownerless. This lands teams in endless debates about responsibility.

- Without centralized oversight, technical debt skyrockets. Teams spend more time troubleshooting integration issues than innovating.

Silent Changes with Explosive Results

Decentralized ownership exposes data workflows to silent disruptions. A team tweaking a schema within their domain can cause a chain reaction of failures elsewhere, especially if communication practices are fractured or missing.

Typical Scenario:

- Team B changes user_id from an integer (INT) to a universally unique identifier (UUID).

- This breaks apps and pipelines downstream that rely on the old schema, without triggering notifications, alerts, or fixes.

- What follows is a high-effort scramble by Team A, wasting hours firefighting a failure that proper data lifecycle management and disciplined cross-team collaboration could have prevented.
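To make this failure mode concrete, here is a minimal Python sketch. The consumer function and event payloads are hypothetical; the sketch assumes Team A's consumer parses user_id as an integer, which is exactly the assumption Team B's change silently invalidates.

```python
import uuid


def consume_user_event(event: dict) -> int:
    """Hypothetical Team A consumer, written against the old schema (user_id is an INT)."""
    # int() accepts ints and numeric strings, but raises ValueError on a UUID string.
    return int(event["user_id"])


old_event = {"user_id": 42, "action": "login"}
new_event = {"user_id": str(uuid.uuid4()), "action": "login"}  # after Team B's change

print(consume_user_event(old_event))  # 42

try:
    consume_user_event(new_event)
except ValueError as exc:
    # Nothing upstream warned Team A; the first signal is a runtime error in their pipeline.
    print(f"consumer failed: {exc}")
```

Nothing in the producer's own domain fails here, which is why the break goes unnoticed until a downstream pipeline stops.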

The Mirage of Governance

Federated governance, the holy grail of Data Mesh, is often a mirage. In practice, it proves nearly impossible to maintain consistent data quality or enforce cross-domain agreements without imposing operational discipline.

Governance Failures in Action:

- Teams define user_id differently across domains, which introduces schema inconsistencies that block effective integration. This erodes trust in the entire data infrastructure.

- Tools promising “standardized contracts” lock organizations into batch-first processing, even when their businesses demand real-time capabilities.

- A lack of rigor in governance processes creates more noise than clarity. Teams waste energy revisiting schema definitions, agreements, and failed validations repeatedly without resolution.
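Conflicting definitions can at least be surfaced automatically. The sketch below assumes each domain publishes its schema as a flat field-name-to-type mapping (a simplification; real schemas would typically live in a schema registry as Avro, Protobuf, or JSON Schema). The domain and field names are illustrative. It reports every field that domains declare with different types.

```python
from collections import defaultdict


def find_conflicts(domain_schemas: dict[str, dict[str, str]]) -> dict[str, dict[str, list[str]]]:
    """Return fields whose declared type differs across domains.

    Result maps field name -> {declared type: sorted list of domains using that type}.
    """
    declared: dict[str, dict[str, set[str]]] = defaultdict(lambda: defaultdict(set))
    for domain, schema in domain_schemas.items():
        for field_name, field_type in schema.items():
            declared[field_name][field_type].add(domain)
    # Only fields declared with more than one distinct type count as conflicts.
    return {
        field_name: {t: sorted(domains) for t, domains in types.items()}
        for field_name, types in declared.items()
        if len(types) > 1
    }


schemas = {
    "sales": {"user_id": "int", "order_id": "string"},
    "marketing": {"user_id": "uuid", "campaign": "string"},
    "finance": {"user_id": "int", "order_id": "string"},
}
print(find_conflicts(schemas))
# {'user_id': {'int': ['finance', 'sales'], 'uuid': ['marketing']}}
```

The check only makes the disagreement visible; deciding which definition wins is still an ownership decision.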

Why Tools Fail to Solve the Problem

The market offers many “Data Mesh tools” that claim to solve these challenges. However, no tool can fix the underlying cultural and operational misalignments. Tools tend to add layers that paper over what is broken rather than repair it.

Reality vs. Vendor Promises

| Vendor Promise | The Reality |
|---|---|
| Data contracts for all! | Works only in theory; outdated data workflows cripple real-time, event-driven architectures. |
| Unified data catalog! | A catalog lists conflicts but doesn’t resolve them. |
| No vendor lock-in! | Custom schema validation and manual fixes remain unavoidable, leading to recurring technical debt. |

The issue is not the tools but the lack of alignment between the organization and the principles of Data Mesh. No tool can replace cross-team collaboration, explicit ownership responsibilities, or operational readiness.

Why Real-Time Systems Expose Data Mesh’s Gaps

Many modern businesses now depend on real-time data to drive competitive advantage. Systems like event meshes prioritize real-time processing, fast responses, and decentralized architecture. This demand for real-time capabilities highlights where Data Mesh principles, rooted in the slower paradigms of data warehousing, are falling short.

Let’s explore where these gaps show up:

Batch Thinking Conflicts with Real-Time Expectations:

- Data Mesh often relies on concepts like batch-first pipelines, where data is processed and shared in predefined intervals (e.g., hourly or nightly). This thinking works well in environments focused on analytics but fails in modern transactional systems where events need to trigger actions immediately.

- Event-driven systems demand continuous processing and instant updates. In this world, aggregations, transformations, and integration must happen dynamically, not in scheduled batches.
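The difference is easiest to see side by side. This toy Python sketch uses hypothetical names. The batch version produces a revenue figure only when the scheduled job runs over the accumulated events. The streaming version updates its state, and can react, the moment each event arrives. A production system would use a stream processor rather than an in-process loop.

```python
from dataclasses import dataclass, field
from typing import Callable


def batch_revenue(events: list[dict]) -> float:
    """Batch style: nothing is known until the scheduled job processes the whole window."""
    return sum(e["amount"] for e in events)


@dataclass
class StreamingRevenue:
    """Streaming style: state is updated per event, and actions fire immediately."""
    on_threshold: Callable[[float], None]
    threshold: float
    total: float = 0.0
    _fired: bool = field(default=False, repr=False)

    def handle(self, event: dict) -> None:
        self.total += event["amount"]
        # React as soon as the condition holds, not at the next batch interval.
        if not self._fired and self.total >= self.threshold:
            self._fired = True
            self.on_threshold(self.total)


events = [{"amount": 40.0}, {"amount": 70.0}, {"amount": 15.0}]
alerts: list[float] = []
stream = StreamingRevenue(on_threshold=alerts.append, threshold=100.0)
for e in events:
    stream.handle(e)  # the alert fires while processing the second event

print(batch_revenue(events), stream.total, alerts)  # 125.0 125.0 [110.0]
```

Both approaches arrive at the same total. Only the streaming one could have triggered an action at the moment the threshold was crossed.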

Data Products Struggle to Map to Events:

- Data Mesh principles emphasize the creation of data products owned by autonomous teams. But the definition of a data product is less clear in event-driven systems.

- Is a data product a Kafka topic? A stream of events? What happens when an event contains multiple pieces of transactional data that span domains?

- This ambiguity complicates how event-driven teams align with Data Mesh principles.

Cross-Domain Complexity Expands:

- In event meshes, data doesn’t just flow between domains in predictable pipelines. Events often span multiple domains in unpredictable ways.

- For example, when a user places an order, the OrderPlaced event might need to trigger updates in:
  - The Sales domain (for revenue forecasting).
  - The Inventory domain (to update stock levels).
  - The Shipping domain (to plan fulfillment).

- Managing these shared event dependencies requires a level of real-time coordination that traditional Data Mesh governance structures weren’t designed to handle.
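A minimal in-process sketch of that fan-out follows. The EventBus class is hypothetical and stands in for a broker topic read by several independent consumers. The sketch shows the key point: one producer emits OrderPlaced once, each domain subscribes on its own, and the event's payload therefore becomes a dependency of all three.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]


class EventBus:
    """Hypothetical stand-in for a broker: each event type fans out to every subscriber."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[tuple[str, Handler]]] = defaultdict(list)

    def subscribe(self, event_type: str, domain: str, handler: Handler) -> None:
        self._subscribers[event_type].append((domain, handler))

    def publish(self, event_type: str, payload: dict) -> list[str]:
        """Deliver the payload to all subscribers; return the domains that received it."""
        delivered = []
        for domain, handler in self._subscribers[event_type]:
            handler(payload)
            delivered.append(domain)
        return delivered


log: list[str] = []
bus = EventBus()
bus.subscribe("OrderPlaced", "Sales", lambda e: log.append(f"forecast += {e['total']}"))
bus.subscribe("OrderPlaced", "Inventory", lambda e: log.append(f"reserve {e['qty']} x {e['product_id']}"))
bus.subscribe("OrderPlaced", "Shipping", lambda e: log.append(f"plan shipment for {e['order_id']}"))

order = {"order_id": "o-1", "product_id": "p-9", "qty": 2, "total": 59.98}
print(bus.publish("OrderPlaced", order))  # ['Sales', 'Inventory', 'Shipping']
print(log)
```

Renaming or retyping any of order_id, product_id, qty, or total now affects three teams at once.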

Revisiting Data Mesh Principles in the Context of Events

To remain relevant in event-driven architectures, the core principles of Data Mesh must evolve. Here’s how some of the original principles can be adapted:

| Original Data Mesh Principle | Challenges in Event-Driven Context | What Needs to Change |
|---|---|---|
| Decentralized Ownership: Domains own their data as products. | Events often span multiple domains, creating ownership overlap and conflict. | Ownership must include shared event contracts with clear cross-domain accountability. |
| Data as a Product: Manage data like a valuable product. | It’s unclear how to define an “event product” (e.g., streams with dynamic schemas). | Redefine data products to better align with real-time streams and topics. |
| Federated Governance: Teams maintain decentralized policies. | Event-driven systems amplify the challenge of consistent schemas and metadata. | Implement stricter governance over real-time event schemas and lineage tracking. |
| Self-Service Infrastructure: Teams use tools to manage data. | Tools designed for batch systems (e.g., data warehouses) fail in dynamic contexts. | Adopt event-first platforms (e.g., Kafka, Pulsar) for real-time enablement. |

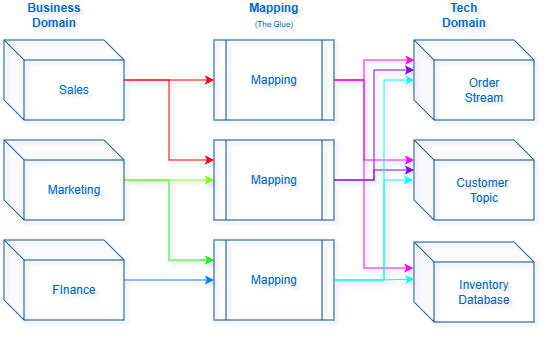

Understanding the Business and Tech Domains

Before diving deeper into this explanation, I’d like to note that I am introducing this concept as a framework that I believe holds value in addressing critical Data Mesh implementation gaps. It’s possible that others have explored similar ideas, and I don’t claim ownership or definitive originality here. My intention is purely to contribute constructively, offering a model to help organizations bridge the disconnect between business goals and technical execution.

- Business Data Domains: These represent how the business perceives its operations and processes. Each domain aligns with a real-world area of the organization, such as Sales, Marketing, Inventory, or Shipping. These domains define what’s important to the business using concepts like customer_id, order_status, or product_id.

- Technical Data Domains: These are where the business domain concepts are implemented. In technical terms, these domains deal with the storage, structure, and logic of the data, such as stream implementations, schema definitions, topic structures, and event processing pipelines.

The two must be mapped carefully. Each business concept (OrderPlaced, StockUpdated, etc.) must have a clear representation in the technical data domain so that the reality of the business can exist and operate within the technical ecosystem. Without this mapping, the glue that connects strategy with execution falls apart.
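One way to make this mapping explicit is a machine-readable binding from each business concept to its technical home. In the sketch below, the TechnicalBinding type, the topic names, and the schema subject names are all hypothetical. The check verifies two things: every business event has exactly one technical binding, and no two bindings share a topic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TechnicalBinding:
    """Where a business event lives in the technical data domain (names are illustrative)."""
    business_event: str
    owning_domain: str
    topic: str
    schema_subject: str


def validate_mapping(business_events: set[str], bindings: list[TechnicalBinding]) -> list[str]:
    """Return human-readable problems; an empty list means the mapping is consistent."""
    problems = []
    bound = [b.business_event for b in bindings]
    for event in sorted(business_events):
        count = bound.count(event)
        if count == 0:
            problems.append(f"{event}: no technical representation")
        elif count > 1:
            problems.append(f"{event}: mapped {count} times")
    topics = [b.topic for b in bindings]
    for topic in sorted(set(topics)):
        if topics.count(topic) > 1:
            problems.append(f"topic {topic}: shared by multiple bindings")
    return problems


bindings = [
    TechnicalBinding("OrderPlaced", "Sales", "sales.order-placed.v1", "sales.order-placed"),
    TechnicalBinding("StockUpdated", "Inventory", "inventory.stock-updated.v1", "inventory.stock-updated"),
]
print(validate_mapping({"OrderPlaced", "StockUpdated", "DiscountApplied"}, bindings))
# ['DiscountApplied: no technical representation']
```

Run as part of CI, a check like this flags a business concept that exists only on a whiteboard before any team starts consuming it.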

The Relationship Illustrated

The Fix: Practical Adjustments for Real Impact

Organizations don’t need to abandon Data Mesh; they need to reinvent their operational approach, aligning its principles with the realities of event-driven architectures. Event meshes and real-time processing challenge many of Data Mesh’s assumptions, but these adjustments can help bridge the gap:

The Glue Effect: Bridging Business and Tech Data Domains

One of the most critical, and often misunderstood, aspects of Data Mesh is "the glue": the framework that joins business data domains to their technical implementations. This glue ensures the organization’s data strategy aligns with its business goals while enabling decentralized architectures.

Formalize Ownership of Shared Event Streams

In an event-driven system, shared data connections like customer_id or user_id are often part of an event stream that spans multiple domains. Ownership can get foggy, leading to conflicts and operational slowdowns.

What needs to change:

- Define explicit ownership for critical event streams. For example:
  - The Sales domain owns OrderPlaced events.
  - The Inventory domain owns StockUpdated events.

- Ensure shared events used across domains (e.g., OrderPlaced triggering inventory updates) have joint accountability, with clear agreements about schema definitions and compatibility.

- Document ownership agreements and event contracts clearly to avoid disputes around responsibilities.
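Ownership agreements are easier to enforce when they are written down in a form tooling can read. The OwnershipRegistry below is hypothetical. It records one owning domain per event plus that event's consumers, and derives who must sign off on a schema change. The approval rule (owner plus every consuming domain) is an assumption chosen to illustrate joint accountability, not a prescribed process.

```python
from dataclasses import dataclass, field


@dataclass
class EventOwnership:
    event: str
    owner: str  # exactly one accountable domain
    consumers: set[str] = field(default_factory=set)


class OwnershipRegistry:
    """Hypothetical registry of who owns and who consumes each event stream."""

    def __init__(self) -> None:
        self._entries: dict[str, EventOwnership] = {}

    def register(self, event: str, owner: str) -> None:
        if event in self._entries:
            # Refuse silent re-ownership; a transfer should be an explicit, documented decision.
            raise ValueError(f"{event} already owned by {self._entries[event].owner}")
        self._entries[event] = EventOwnership(event, owner)

    def add_consumer(self, event: str, domain: str) -> None:
        self._entries[event].consumers.add(domain)

    def approvers_for_change(self, event: str) -> list[str]:
        """Owner first, then consumers; together they share accountability for compatibility."""
        entry = self._entries[event]
        return [entry.owner] + sorted(entry.consumers - {entry.owner})


registry = OwnershipRegistry()
registry.register("OrderPlaced", "Sales")
registry.register("StockUpdated", "Inventory")
registry.add_consumer("OrderPlaced", "Inventory")
registry.add_consumer("OrderPlaced", "Shipping")
print(registry.approvers_for_change("OrderPlaced"))  # ['Sales', 'Inventory', 'Shipping']
```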

Standardize the Definitions of Business Events

Without a common understanding of business events, teams often misinterpret event data or duplicate logic. In the world of event-driven architecture, defining events and their payloads consistently is crucial for collaboration.

What needs to change:

- Build a shared event dictionary that defines key events across the organization:
  - OrderPlaced: Includes timestamp, order ID, customer ID, and product details.
  - StockUpdated: Includes product ID, quantity change, and warehouse location.

- Specify the schema and metadata for every event to avoid ambiguities.

- Train teams to reference the shared dictionary when producing or consuming events, ensuring consistency across domains.
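A shared dictionary can be as simple as one module of typed event definitions that every producer and consumer imports. The sketch below encodes the two entries above as Python dataclasses. The field names and types are assumptions that fill in the prose descriptions; for example, "product details" becomes a tuple of line items. The non-zero check on StockUpdated is likewise an illustrative rule rather than part of the definition. In practice, the same definitions would usually be published as Avro, Protobuf, or JSON Schema in a schema registry.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LineItem:
    product_id: str
    quantity: int
    unit_price: float


@dataclass(frozen=True)
class OrderPlaced:
    """Shared definition: timestamp, order ID, customer ID, and product details."""
    timestamp: datetime
    order_id: str
    customer_id: str
    items: tuple[LineItem, ...]


@dataclass(frozen=True)
class StockUpdated:
    """Shared definition: product ID, quantity change, and warehouse location."""
    product_id: str
    quantity_change: int  # negative for decrements, positive for restocks
    warehouse_location: str

    def __post_init__(self) -> None:
        # Illustrative validation rule (an assumption, not from the dictionary itself).
        if self.quantity_change == 0:
            raise ValueError("StockUpdated must carry a non-zero quantity change")


event = OrderPlaced(
    timestamp=datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc),
    order_id="o-1",
    customer_id="c-7",
    items=(LineItem("p-9", 2, 29.99),),
)
print(asdict(event)["items"][0]["product_id"])  # p-9
```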

Adopt Real-Time Event Processing

In many early implementations of Data Mesh, teams stick to batch processing workflows inherited from data warehouses. This approach conflicts with the dynamic nature of event-driven systems, where data streams must operate in real time.

What needs to change:

- Transition pipelines to event-driven, real-time systems powered by platforms like Kafka or Redpanda.

- Design workflows with event-first thinking:
  - Events should trigger immediate actions (e.g., order fulfillment starting as soon as an OrderPlaced event is received).
  - Processing should happen dynamically, avoiding delays caused by batch intervals.

- Implement infrastructure with support for high-speed streaming, rapid schema updates, and fault-tolerant processes.

Establish Event Contracts for Schema Changes

Event-driven architectures introduce additional complexity around schema evolution since events often flow across multiple domains with crucial dependencies. A poorly managed schema change can disrupt consumers downstream, causing failures in workflows.

What needs to change:

- Replace static schema contracts with event contracts that evolve dynamically. These contracts define the payload, metadata, and rules for compatibility as events change. For example:
  - If OrderPlaced adds a new discount_code field, consumers of the event receive a notification prior to deployment.
  - Backward compatibility must be enforced in all schema updates to prevent breaking changes.

- Automate validation processes:
  - Updates to event schemas should trigger automated tests across dependent systems.
  - Deployments can only proceed after downstream dependencies are validated successfully.

- Use tools like schema registries to track changes, enforce versioning, and notify consumers.

- Mapping Business Needs Properly: When mapping business concepts to technical domains, ensure there is a clear, consistent representation. A misunderstanding across teams (e.g., one viewing order_status as batch data and another as real-time events) can lead to failures.

- Avoid Overlaps: Careful mapping allows shared concepts (e.g., customer_id) to exist only once in the technical layer, rather than being duplicated across systems. Tech domains must be modular and align with the ownership boundaries established in the business domains.

- Maintain Consistency Across Updates: The glue must also track changes. As business needs evolve (e.g., adding a new event like DiscountApplied), these changes need to propagate cleanly into the technical domains while remaining compatible with existing workflows.
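The compatibility rules for event contracts described in this section can be made mechanical. Below is a deliberately simplified checker over flat field-to-type schemas. It asks whether existing consumers, written against the old version, can still process events produced with the new one. Its rules are assumptions chosen for this example. Adding a field is allowed, on the assumption that consumers ignore unknown fields. Removing a field, changing its type, or making a required field optional is flagged as breaking. Real schema registries define several compatibility modes (backward, forward, full) with format-specific rules, and FieldSpec, breaking_changes, and can_deploy here are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    kind: str
    required: bool = True


Schema = dict[str, FieldSpec]


def breaking_changes(old: Schema, new: Schema) -> list[str]:
    """List changes that would break consumers written against `old` (simplified rules)."""
    problems = []
    for name, spec in old.items():
        if name not in new:
            problems.append(f"{name}: field removed")
        elif new[name].kind != spec.kind:
            problems.append(f"{name}: type changed {spec.kind} -> {new[name].kind}")
        elif spec.required and not new[name].required:
            problems.append(f"{name}: no longer guaranteed to be present")
    # Newly added fields are not flagged: this sketch assumes consumers tolerate unknown fields.
    return problems


def can_deploy(old: Schema, new: Schema) -> bool:
    """Deployment gate: proceed only when no breaking change is found."""
    return not breaking_changes(old, new)


v1: Schema = {
    "order_id": FieldSpec("string"),
    "customer_id": FieldSpec("int"),
    "timestamp": FieldSpec("timestamp"),
}
v2: Schema = {**v1, "discount_code": FieldSpec("string", required=False)}  # additive change
v3: Schema = {**v1, "customer_id": FieldSpec("uuid")}  # the INT -> UUID change

print(breaking_changes(v1, v2))  # []
print(breaking_changes(v1, v3))  # ['customer_id: type changed int -> uuid']
```

Wired into CI, can_deploy would block the INT to UUID change and let the discount_code addition through. Notifying consumers of the additive change would still be a separate step.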

Build Federated, Real-Time Governance

Effective governance is even harder in event-driven systems due to the decentralized nature of events. Without coordination, teams risk mismanaging schemas, losing event lineage, or duplicating work.

What needs to change:

- Create governance processes specifically designed for real-time workflows:
  - Use metadata tracking tools to monitor event lineage and understand their cross-domain impacts.
  - Establish responsibility matrices that define ownership and consumption rights for key event topics.
  - Enforce policies for schema validation and evolution to ensure updates never disrupt downstream systems.

- Implement dashboards to visually track how events move through your architecture and identify bottlenecks in real time.
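Lineage tracking pays off when someone asks what breaks if an event changes. Assume lineage is recorded as producer-to-consumer edges between events and services; the names below are hypothetical. Under that assumption, a breadth-first traversal returns every downstream asset a schema change could affect, including indirect ones.

```python
from collections import defaultdict, deque


def downstream_impact(edges: list[tuple[str, str]], changed: str) -> list[str]:
    """Return every node reachable from `changed`, in breadth-first order."""
    graph: dict[str, list[str]] = defaultdict(list)
    for source, target in edges:
        graph[source].append(target)
    seen = {changed}
    order: list[str] = []
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for nxt in graph[node]:
            if nxt not in seen:  # guards against cycles and diamond-shaped lineage
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


lineage = [
    ("OrderPlaced", "inventory-service"),
    ("OrderPlaced", "revenue-forecast"),
    ("inventory-service", "StockUpdated"),
    ("StockUpdated", "replenishment-dashboard"),
    ("revenue-forecast", "finance-report"),
]
print(downstream_impact(lineage, "OrderPlaced"))
# ['inventory-service', 'revenue-forecast', 'StockUpdated', 'finance-report', 'replenishment-dashboard']
```

The same traversal could feed a dashboard or the list of consumers to notify before a schema change ships.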

Data Mesh Isn’t the Problem, Your Organization Is...

The blame for Data Mesh failures lies not with Zhamak Dehghani’s inspired theory but with organizations that underestimate its requirements. Without proper preparation, operational alignment, and cultural buy-in, companies set themselves up for frustration and failure.

To succeed with Data Mesh, organizations must treat it as a transformational shift, not a quick initiative. It demands cohesive teamwork, a shared language of concepts, and processes designed to sustain cross-team collaboration.

A Prerequisite for Success

Adopting Data Mesh requires readiness across people, processes, and governance. A decentralization strategy without discipline will always descend into chaos.

When a pipeline breaks or a dashboard fails, let's look past the Data Mesh label and examine how we’ve actually built it. Usually, these gaps aren't a failure of the tech, but a sign that our implementation hasn't yet fully embraced the principles that make it work. Every glitch is an opportunity to stop subverting the model and start building the environment where our tools, and our teams, can actually adapt to its demands.

Data Mesh is not a tool—it’s a mindset. Success is possible, but only if you’re ready to put in the work and the will.

Remember: Event-driven architecture isn’t a replacement for Data Mesh—it’s an evolution of its implementation. Organizations must adapt their thinking to treat events as the foundation for decisions, workflows, and strategies in the modern era.